Activation Function in Deep Learning: Without activation functions, a deep learning model would simply be a glorified linear regression, because any stack of purely linear layers collapses into a single linear transformation. They’re what make the “deep” in deep learning worthwhile.

“A neural network without an activation function is like a light bulb with no switch.”

In simple terms, activation functions decide whether a neuron should be activated or not. They introduce non-linearity into the network, allowing it to learn complex patterns. But how do they work? And why are there so many different kinds?

Let us help you learn it better!

What is an Activation Function in Deep Learning?

An activation function in deep learning is a mathematical function that determines the output of a neural network’s node, or “neuron.” It takes the neuron’s input signal (the weighted sum of its inputs plus a bias) and transforms it, either passing it through or squashing it down. This output is then passed to the next layer.

Here’s a quick analogy: imagine a security checkpoint. Not every signal (or input) gets a green light. The activation function decides which inputs matter and which don’t.
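To make the definition concrete, here is a minimal Python sketch of a single neuron: it computes the weighted sum of its inputs plus a bias, then hands that number to an activation function. The function names (`neuron_output`, `sigmoid`) and the input, weight, and bias values are hypothetical, chosen only for illustration.

```python
import math

def sigmoid(z):
    """Sigmoid activation: squashes z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation):
    """One neuron's forward pass: activation(weighted sum + bias)."""
    # Pre-activation: weighted sum of the inputs plus the bias
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    # The activation function turns the pre-activation into the neuron's output
    return activation(z)

# Hypothetical inputs, weights and bias, chosen only for illustration
x = [0.5, -1.0, 2.0]
w = [0.4, 0.3, -0.2]
b = 0.1

print(neuron_output(x, w, b, lambda z: z))  # no activation: just the weighted sum + bias
print(neuron_output(x, w, b, sigmoid))      # same sum, squashed into (0, 1)
```

Swapping the `activation` argument is all it takes to try a different function; the weighted sum itself stays the same.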

Here are some of the most popular activation functions used in deep learning:

- Sigmoid

- ReLU (Rectified Linear Unit)

- Tanh

- Softmax

- Leaky ReLU

We’ll talk more about each in the next section.

Before that, a one-line recap: an activation function in deep learning is a mathematical function that determines whether, and how strongly, a neuron activates, helping the model learn complex patterns.

Types of Activation Functions in Deep Learning

Learning about the types of activation functions in deep learning helps you pick the right one for your specific task. Different functions behave differently, and choosing the wrong one can lead to poor model performance.

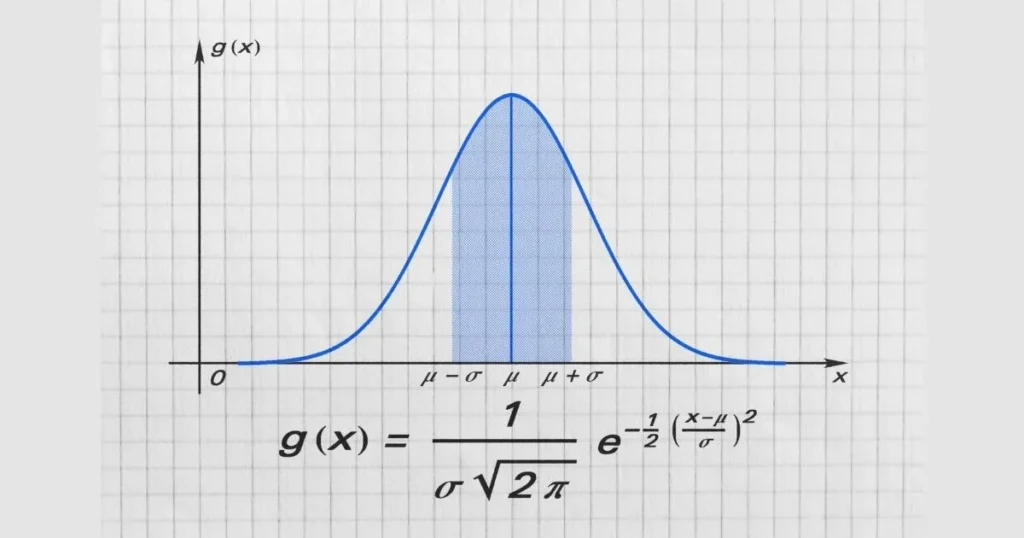

1. Sigmoid Function

The sigmoid function, σ(x) = 1 / (1 + e^(-x)), squashes input values into the range between 0 and 1.

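It is commonly used in the output layer for binary classification problems. The downside is that it can cause the vanishing gradient problem: for large positive or negative inputs the curve flattens out, its gradient approaches zero, and the layers before it learn very slowly.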

“The sigmoid is like a gentle curve: it handles data with caution but may be too slow for deeper networks.”
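The vanishing gradient problem is easier to see with numbers. This plain-Python sketch (helper names are hypothetical) evaluates the sigmoid and its derivative, σ(x)(1 - σ(x)), at a few points. The gradient never exceeds 0.25, and it is tiny far from zero, so multiplying many such factors during backpropagation shrinks the learning signal quickly.

```python
import math

def sigmoid(x):
    """Sigmoid: squashes any real number into the range (0, 1).
    (This simple form overflows for extremely negative x, e.g. x < -700.)"""
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    """Derivative of the sigmoid: sigma(x) * (1 - sigma(x)), at most 0.25."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in [-10, -2, 0, 2, 10]:
    print(f"x={x:>4}  sigmoid={sigmoid(x):.4f}  gradient={sigmoid_grad(x):.6f}")

# Rough illustration: backpropagating through n sigmoid layers multiplies
# n such factors (weights ignored here), each no larger than 0.25.
print("upper bound after 10 layers:", 0.25 ** 10)
```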

2. Tanh (Hyperbolic Tangent)

This function is similar to sigmoid but maps values between -1 and 1: tanh(x) = (e^x - e^(-x)) / (e^x + e^(-x)).

Because its output is centered on zero, it often works better than sigmoid in hidden layers. However, it still suffers from vanishing gradients in deeper networks.
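A short sketch of why tanh behaves like a centered sigmoid, using Python’s built-in `math.tanh`: it checks the identity tanh(x) = 2σ(2x) - 1 and prints the gradient 1 - tanh²(x), which peaks at 1 (instead of sigmoid’s 0.25) but still fades for large inputs. The helper names are hypothetical.

```python
import math

def sigmoid(x):
    """Sigmoid: squashes x into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2, which peaks at 1.0 when x = 0."""
    return 1.0 - math.tanh(x) ** 2

for x in [-3.0, -1.0, 0.0, 1.0, 3.0]:
    # tanh is a rescaled, zero-centered sigmoid: tanh(x) = 2 * sigmoid(2x) - 1
    assert math.isclose(math.tanh(x), 2 * sigmoid(2 * x) - 1, abs_tol=1e-12)
    print(f"x={x:>5}  tanh={math.tanh(x):+.4f}  gradient={tanh_grad(x):.4f}")
```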

3. ReLU (Rectified Linear Unit)

This is the most commonly used activation function for hidden layers today. It outputs the input directly if it’s positive; otherwise, it returns zero: f(x) = max(0, x).

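It is the default choice for hidden layers in many deep learning models because it is cheap to compute and its gradient does not shrink for positive inputs. However, it can suffer from the “dying ReLU” problem: a neuron whose input stays negative always outputs zero, receives zero gradient, and stops learning.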
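Here is a minimal sketch of ReLU and its gradient, using hypothetical pre-activation values. It shows the “dying ReLU” situation: if a neuron’s input is negative for every example, both its output and its gradient are zero, so gradient descent has nothing to update it with. Treating the gradient at exactly 0 as 0 is a convention assumed here.

```python
def relu(x):
    """ReLU: passes positive inputs through unchanged, outputs 0 otherwise."""
    return max(0.0, x)

def relu_grad(x):
    """Gradient of ReLU: 1 for positive inputs, 0 otherwise (0 chosen at x = 0)."""
    return 1.0 if x > 0 else 0.0

# Hypothetical pre-activations of one neuron across a batch, all negative:
# every output is 0 and every gradient is 0, so its weights stop updating.
pre_activations = [-2.3, -0.7, -1.1, -4.0]
outputs = [relu(z) for z in pre_activations]
grads = [relu_grad(z) for z in pre_activations]
print(outputs, grads)
```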

4. Leaky ReLU

A twist on ReLU, it allows a small, non-zero gradient when the unit is not active: f(x) = x for positive inputs and αx otherwise, where α is a small constant. It is used when ReLU neurons die during training, because neurons keep learning even when the input is negative.
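A minimal Leaky ReLU sketch in plain Python. The default `alpha=0.01` is a commonly used value, assumed here rather than prescribed; the point is that negative inputs now produce a small non-zero gradient instead of zero. The pre-activation values are hypothetical.

```python
def leaky_relu(x, alpha=0.01):
    """Leaky ReLU: x for positive inputs, alpha * x otherwise."""
    return x if x > 0 else alpha * x

def leaky_relu_grad(x, alpha=0.01):
    """Gradient is 1 for positive inputs and alpha (not 0) otherwise."""
    return 1.0 if x > 0 else alpha

# Hypothetical pre-activations: the negative ones still pass a small gradient
pre_activations = [-2.3, -0.7, 1.5]
print([leaky_relu(z) for z in pre_activations])
print([leaky_relu_grad(z) for z in pre_activations])
```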

5. Softmax

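Softmax is used primarily in output layers for multi-class classification problems, such as image recognition and NLP tasks. It converts a vector of raw scores (logits) into probabilities that are non-negative and sum to 1: softmax(z_i) = e^(z_i) / Σ_j e^(z_j).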
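A minimal softmax sketch in plain Python. Subtracting the largest logit before exponentiating is a standard numerical-stability trick (it cancels out mathematically, but keeps `math.exp` from overflowing on large scores). The three logits are hypothetical scores for a 3-class problem.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    # Shift by the max logit: same result, but no overflow for large scores
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical logits for a 3-class problem (e.g., cat / dog / bird)
probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(sum(probs))  # 1.0, up to floating-point rounding
```

The highest logit always gets the highest probability, which is why the predicted class is usually taken as the index of the largest softmax output.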

Uses of Activation Functions in Deep Learning

So, what’s the use of activation functions in deep learning?

Here’s why they’re essential:

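- Adds non-linearity: Real-world data isn’t linear. Without non-linearity, any stack of layers collapses into a single linear transformation, so the model can’t capture complex relationships.

- Controls signal flow: Loosely like neurons in the brain, an activation function lets some signals pass strongly while dampening or blocking others.

- Improves learning: A well-chosen function keeps gradients from vanishing, which can reduce errors and speed up training.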
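The “adds non-linearity” point can be checked directly. In this sketch, two linear layers applied one after another give exactly the same result as a single layer whose matrix is their product, so depth alone adds nothing. Putting a ReLU between them breaks that equivalence. The matrices and input vector are hypothetical, and biases are left out to keep the arithmetic short (with biases, the composition is still a single affine map).

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def matvec(matrix, vector):
    """Apply a matrix to a vector given as a flat list."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]

# Two hypothetical weight matrices (biases omitted for brevity)
W1 = [[1.0, -2.0], [0.5, 3.0]]
W2 = [[2.0, 1.0], [-1.0, 0.0]]
x = [1.0, 2.0]

two_linear_layers = matvec(W2, matvec(W1, x))
one_merged_layer = matvec(matmul(W2, W1), x)
print(two_linear_layers, one_merged_layer)  # identical: [0.5, 3.0] [0.5, 3.0]

# With ReLU between the layers the output changes, and in general
# no single matrix reproduces the resulting mapping.
relu_between = matvec(W2, [max(0.0, h) for h in matvec(W1, x)])
print(relu_between)
```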

Still wondering how to choose an activation function for deep learning? Keep reading.

How to Choose an Activation Function for Deep Learning

Choosing the right activation function can make or break your model. Here are some tips:

- For binary outputs, go with sigmoid.

- For hidden layers, ReLU is the default pick.

- For multi-class classification, softmax is ideal.

- For deep networks where ReLU fails (for example, because neurons die during training), try Leaky ReLU or ELU (Exponential Linear Unit).

“There’s no one-size-fits-all. Testing and validation are your best friends.”
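ELU is named in the tips above without a definition. One common form is ELU(x) = x for x > 0 and α(e^x - 1) otherwise, with α often set to 1.0 (an assumption in this sketch). Unlike Leaky ReLU, its negative side curves smoothly toward -α instead of continuing in a straight line.

```python
import math

def elu(x, alpha=1.0):
    """ELU: x for positive inputs, alpha * (exp(x) - 1) otherwise."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)

def leaky_relu(x, alpha=0.01):
    """Leaky ReLU, for comparison: x for positive inputs, alpha * x otherwise."""
    return x if x > 0 else alpha * x

for x in [-5.0, -1.0, 0.0, 2.0]:
    # Negative inputs: ELU levels off near -alpha, Leaky ReLU keeps falling linearly
    print(f"x={x:>5}  elu={elu(x):+.4f}  leaky_relu={leaky_relu(x):+.4f}")
```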

Applications of Deep Learning Activation Functions

Here are a few real-world examples of where activation functions play a huge role:

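- Image Recognition: ReLU is the standard hidden-layer activation in many CNNs used for image classification and object detection

- Speech Processing: Tanh and sigmoid are used inside LSTMs, a type of recurrent network long used for speech recognition

- Healthcare: Softmax output layers let models assign probabilities to several possible diagnoses

- Finance: ReLU-based networks help identify fraud patterns in transaction data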
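To see where tanh and sigmoid sit inside an LSTM, here is a sketch of one step of a single-unit LSTM cell using the standard gate equations. The sigmoid gates (forget, input, output) act as 0-to-1 valves, while tanh produces the candidate value and squashes the output. Parameter names and values are hypothetical; real LSTMs use vectors and weight matrices rather than scalars.

```python
import math

def sigmoid(x):
    """Sigmoid: squashes x into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell (scalar input and state)."""
    # Sigmoid gates: each acts like a 0-to-1 "how much to let through" valve
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])  # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])  # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])  # output gate
    # Candidate value uses tanh so it can push the cell state up or down
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])
    c = f * c_prev + i * g   # new cell state
    h = o * math.tanh(c)     # new hidden state, always in (-1, 1)
    return h, c

# Hypothetical parameters: every weight 0.5, every bias 0.0
params = {k: 0.5 for k in ["wf", "uf", "wi", "ui", "wo", "uo", "wg", "ug"]}
params.update({k: 0.0 for k in ["bf", "bi", "bo", "bg"]})

h, c = 0.0, 0.0
for x in [1.0, -0.5, 2.0]:  # a tiny hypothetical input sequence
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.1f}  h={h:+.4f}  c={c:+.4f}")
```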

Why Should You Learn About Activation Functions?

If you’re aiming for a career in data analytics or data science, understanding deep learning concepts like activation functions is non-negotiable.

Are you still on the fence about whether data science is a good career? Consider this:

According to NASSCOM, India will need over 1 million data science professionals by 2026.

Here’s a Quick Checklist for Selecting an Activation Function

- Are you working with a binary or multi-class output?

- How deep is your neural network?

- Is speed a priority?

- Are you facing vanishing gradient issues?

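Use your answers to narrow the choice: sigmoid for binary outputs, softmax for multi-class outputs, ReLU for fast hidden layers, and ReLU-family functions (ReLU, Leaky ReLU, ELU) instead of sigmoid or tanh in deep hidden layers if you face vanishing gradients.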
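The checklist can be boiled down to a tiny decision helper. `suggest_activations` is a hypothetical function that simply encodes this article’s rules of thumb; treat it as a starting point for experiments, not a substitute for validating choices on your own data.

```python
def suggest_activations(task, dying_relu=False):
    """Hypothetical helper that encodes this article's rules of thumb.

    task: "binary" or "multiclass" (the kind of output the model predicts).
    Returns a (hidden_layer, output_layer) pair of activation names.
    """
    output_choice = {"binary": "sigmoid", "multiclass": "softmax"}
    if task not in output_choice:
        raise ValueError(f"unsupported task: {task!r}")
    # ReLU is the fast default for hidden layers and avoids the vanishing
    # gradients of sigmoid/tanh; switch to Leaky ReLU if ReLU neurons die.
    hidden = "leaky_relu" if dying_relu else "relu"
    return hidden, output_choice[task]

print(suggest_activations("binary"))
print(suggest_activations("multiclass", dying_relu=True))
```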

On A Final Note…

The activation function in deep learning might sound like a small part of a neural network, but it’s absolutely central to its success. From allowing non-linear transformations to improving learning efficiency, activation functions are what truly power smart systems.

Whether you’re a student exploring deep learning activation functions, a professional planning to switch careers, or someone asking “is data science a good career?”, this knowledge is an important step toward building your tech skills.

FAQs

What is an activation function in deep learning?

It’s a function that adds non-linearity to the model and helps it learn complex patterns.

Why is an activation function necessary?

Without it, the model can’t solve non-linear problems and behaves like a linear regressor, no matter how many layers it has.

Which is the best activation function for deep learning?

There isn’t one. It depends on your model type and data. But ReLU is widely used for hidden layers.

Can I change activation functions mid-training?

It’s not recommended: the learned weights were tuned for the original function, so swapping it changes how the model behaves and usually means re-training the model.

How can I learn activation functions practically?

Hands-on training is best. Zenoffi E-Learning Labb’s Data Science and Data Analytics courses offer real-world projects to practice on.