Attention Mechanism in Deep Learning

Deep learning models have transformed how machines understand and process data. However, traditional sequence models such as RNNs often struggle with long-range dependencies in sequential data.

This is where the attention mechanism in deep learning comes into play. It helps models focus on important parts of input data, improving performance in tasks like machine translation, speech recognition, and computer vision.

But what is the attention mechanism in deep learning, and why is it so effective? Let’s break it down in simple terms.

What Is the Attention Mechanism in Deep Learning?

The attention mechanism in deep learning is a technique that allows neural networks to selectively focus on relevant parts of input data while processing information. It assigns different weights to different input elements, helping the model capture dependencies more effectively.

Imagine you are reading a long document. Instead of memorising every word, you focus on key sentences that hold the most important information. That is roughly how attention works in AI models: it decides which parts of the input deserve more focus and gives them higher weights.

How Does the Attention Mechanism Work?

Attention works by assigning weights to input tokens (words, pixels, etc.) so that the most relevant ones contribute most to the output. The key steps are:

- Query, Key, and Value Calculation – Each input element is projected into a query (Q), key (K), and value (V) vector, usually through learned linear layers.

- Relevance Score Calculation – The similarity between each query and every key is computed, typically as a dot product.

- Weight Assignment – The scores are normalised, usually with a softmax, so that the weights for each query are positive and sum to 1.

- Final Output Generation – The weighted sum of the values produces the output for that query.

A common implementation of this is scaled dot-product attention, which uses matrix multiplications to compute the scores for all queries and keys at once.
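The four steps above can be sketched in a few lines of plain Python. This is a minimal illustration rather than a production implementation: the function names `softmax` and `attention` and the toy vectors are assumptions made for this example, and the query, key, and value vectors are given directly instead of being produced by learned layers.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Returns (outputs, weights); weights[i][j] is how strongly
    query i attends to input j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Relevance score: scaled dot product of the query with every key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        # Weight assignment: softmax turns scores into attention weights
        w = softmax(scores)
        # Final output: weighted sum of the value vectors
        out = [sum(wj * v[c] for wj, v in zip(w, values))
               for c in range(len(values[0]))]
        weights.append(w)
        outputs.append(out)
    return outputs, weights

# Toy data (hypothetical): three inputs with 2-dimensional keys and values
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
queries = [[4.0, 0.0]]  # points in the same direction as the first key

outputs, weights = attention(queries, keys, values)
print([round(w, 3) for w in weights[0]])
print([round(o, 3) for o in outputs[0]])
```

The query has the same dot product with the first and third keys, so those two inputs share most of the weight and the second input contributes very little to the output.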

Types of Attention Mechanism

There are multiple types of attention mechanisms, each designed for specific use cases. Here are the most popular ones:

1. Self-Attention

What is self-attention? It is an attention mechanism in which the queries, keys, and values all come from the same sequence, so each element attends to every element in that sequence, including itself. This helps models capture long-range dependencies effectively.

Example: In natural language processing (NLP), self-attention helps models understand the context of words in a sentence.
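As a rough sketch of self-attention, the snippet below derives queries, keys, and values from one and the same input sequence. The helper names, the 2-dimensional "embeddings", and the fixed projection matrices `w_q`, `w_k`, and `w_v` are all assumptions for illustration; in a trained model the projections are learned.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]

def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Self-attention: Q, K and V are all projections of the same sequence x."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose of K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = [softmax(row) for row in scores]  # one row of weights per token
    return matmul(weights, v), weights

# Hypothetical 3-token sequence with 2-dimensional embeddings
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Fixed projections for illustration only; real models learn these
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[2.0, 0.0], [0.0, 2.0]]

output, weights = self_attention(x, w_q, w_k, w_v)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how one token distributes its attention over all three tokens, which is how a word's representation comes to depend on its context.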

2. Multi-Head Attention

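What is multi-head attention? It is an extension of attention in which several attention operations (heads) run in parallel, each with its own learned projections. Each head can learn to capture a different kind of relationship in the input, and the head outputs are concatenated and combined, making the model more expressive.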

Example: In transformers, multi-head attention enables efficient text translation by capturing multiple relationships between words.
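The idea of parallel heads can be sketched as follows. This is a simplified illustration: it splits each embedding into equal slices and runs attention on each slice, but it leaves out the learned per-head projections and the final output projection that Transformer implementations use. The function names and input values are assumptions for this example.

```python
import math

def softmax(row):
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q, k, v):
    """Scaled dot-product attention for a single head."""
    d_k = len(k[0])
    out = []
    for qi in q:
        w = softmax([sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k)
                     for kj in k])
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v))
                    for c in range(len(v[0]))])
    return out

def multi_head_self_attention(x, num_heads):
    """Split each embedding into num_heads slices, attend per slice, concatenate.

    Simplification: no learned projections are applied before or after the heads.
    """
    d_model = len(x[0])
    assert d_model % num_heads == 0, "embedding size must divide evenly into heads"
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Each head only sees its own slice of the feature dimension
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        head_outputs.append(attend(part, part, part))
    # Concatenate the head outputs back along the feature dimension
    return [sum((head[i] for head in head_outputs), []) for i in range(len(x))]

# Hypothetical 3-token sequence with 4-dimensional embeddings
x = [[1.0, 0.0, 0.5, 0.5],
     [0.0, 1.0, 0.5, -0.5],
     [1.0, 1.0, 0.0, 1.0]]
out = multi_head_self_attention(x, num_heads=2)
print(len(out), len(out[0]))  # prints: 3 4
```

Because each head works on a different slice of the features, the two heads here can weight the same tokens differently, which is the property the learned version exploits.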

3. Global vs. Local Attention

- Global Attention – Considers the entire input sequence when computing attention scores.

- Local Attention – Focuses on a limited window of the sequence, reducing computational complexity.
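The difference between the two can be illustrated by restricting which positions are allowed to receive weight. The sketch below uses a fixed window around a chosen position, which is only one way of defining local attention; the function name `attention_weights` and the score values are assumptions for this example.

```python
import math

def attention_weights(scores, position=None, window=None):
    """Softmax over scores.

    With window=None every position competes for weight (global attention).
    Otherwise only positions within `window` steps of `position` are
    considered (local attention) and all others get weight 0.
    """
    n = len(scores)
    if window is None:
        allowed = list(range(n))
    else:
        allowed = [j for j in range(n) if abs(j - position) <= window]
    peak = max(scores[j] for j in allowed)  # for numerical stability
    exps = {j: math.exp(scores[j] - peak) for j in allowed}
    total = sum(exps.values())
    return [exps[j] / total if j in exps else 0.0 for j in range(n)]

# Hypothetical relevance scores of one query against six input positions
scores = [0.2, 1.5, 0.3, 2.0, 0.1, 0.9]

global_w = attention_weights(scores)
local_w = attention_weights(scores, position=1, window=1)

print([round(w, 3) for w in global_w])
print([round(w, 3) for w in local_w])
```

With the window, only three scores need to be normalised instead of six, which is where the saving on long sequences comes from. The trade-off is that the high-scoring position outside the window is ignored.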

4. Hard vs. Soft Attention

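- Hard Attention – Selects discrete parts of the input, typically by sampling from the attention distribution. The selection step is not differentiable, so training usually relies on techniques such as reinforcement learning.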

- Soft Attention – Assigns continuous attention scores, allowing for gradient-based optimisation.
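A small sketch of the contrast, using plain Python: soft attention blends every value according to its weight, while hard attention picks a single value. Sampling with a seeded random generator is one common way to make the discrete choice and is an assumption here, as are the function names and toy data.

```python
import math
import random

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_attention(scores, values):
    """Blend all values using continuous weights (differentiable)."""
    w = softmax(scores)
    return [sum(wj * v[c] for wj, v in zip(w, values))
            for c in range(len(values[0]))]

def hard_attention(scores, values, rng):
    """Pick exactly one value by sampling from the attention distribution.

    The discrete choice has no useful gradient, which is why hard attention
    cannot be trained with plain backpropagation.
    """
    w = softmax(scores)
    index = rng.choices(range(len(values)), weights=w, k=1)[0]
    return values[index], index

# Hypothetical scores and values for three input elements
scores = [0.1, 2.0, 0.5]
values = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

rng = random.Random(0)  # seeded so the sampled choice is repeatable
soft_out = soft_attention(scores, values)
hard_out, picked = hard_attention(scores, values, rng)

print("soft:", [round(x, 3) for x in soft_out])
print("hard:", hard_out, "from index", picked)
```

The soft output is a mixture that does not equal any single input, whereas the hard output is always exactly one of the original values.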

Each type has its own use case. Transformers, for example, are built on soft, multi-head self-attention, and some variants add local attention to handle longer inputs.

Advantages of Attention Mechanism

The advantages of the attention mechanism in deep learning are significant. Here’s why it has become so popular:

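- Better Handling of Long Sequences – Attention lets every output position look directly at any input position, so important information is less likely to be lost over long sequences than in RNNs and LSTMs, which must carry it through many recurrent steps.

- Parallel Processing – Unlike recurrent models, attention-based architectures can process all positions of a sequence in parallel, speeding up computation.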

- Improved Performance in NLP and Vision Tasks – Attention is the backbone of the Transformer architecture behind modern NLP models like BERT and GPT, and is also used in computer vision models.

- Better Interpretability – Attention weights can be visualised to show which parts of the input the model focused on, although they give only a partial explanation of a prediction.

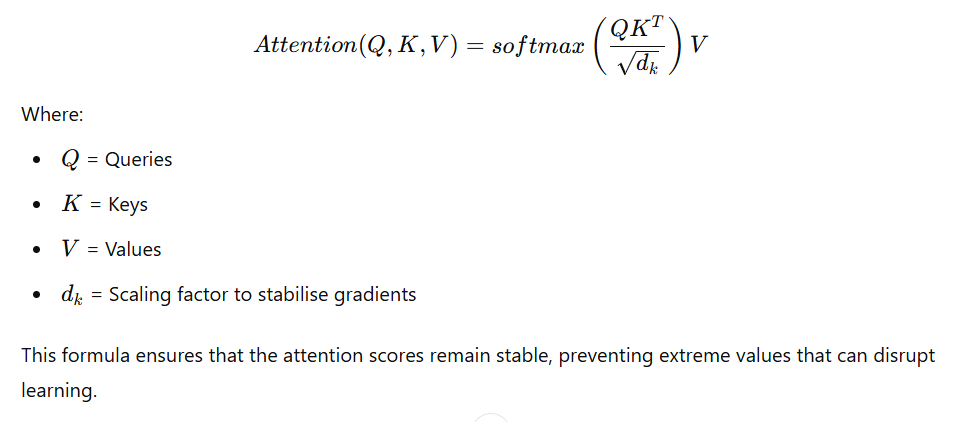

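Scaled Dot-Product Attention: The Core of Transformers

One of the most widely used forms of attention is scaled dot-product attention. It calculates attention scores using matrix multiplications:

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V
$$

Here Q, K, and V are the query, key, and value matrices, and d_k is the dimension of the key vectors.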
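The "scaled" part refers to dividing the query-key dot products by the square root of the key dimension before the softmax. The sketch below is a quick numerical check of what that division does, using made-up 64-dimensional vectors chosen for this example. Without scaling the softmax puts almost all of its weight on one key, while with scaling the weights stay spread out.

```python
import math

def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

d_k = 64  # key dimension (hypothetical)
query = [1.0] * d_k
# Three keys that differ only slightly in how well they match the query
keys = [[1.0] * d_k, [0.9] * d_k, [0.8] * d_k]

# Raw dot products grow with the dimension of the vectors
raw = [sum(q * k for q, k in zip(query, key)) for key in keys]
# Scaled dot-product attention divides by sqrt(d_k) before the softmax
scaled = [s / math.sqrt(d_k) for s in raw]

unscaled_weights = softmax(raw)
scaled_weights = softmax(scaled)

print("unscaled:", [round(w, 4) for w in unscaled_weights])
print("scaled:  ", [round(w, 4) for w in scaled_weights])
```

When the softmax saturates as in the unscaled case, its gradients become very small, which makes training harder. Keeping the scores in a moderate range is the usual motivation for the scaling factor.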

Real-World Applications of Attention Mechanism

Attention mechanisms are widely used in different AI domains. Some key applications include:

- Natural Language Processing (NLP) – Used in models like GPT-4 and BERT for tasks such as translation, summarisation, and text generation.

- Speech Recognition – Attention helps speech-to-text models focus on key audio frames.

- Computer Vision – Attention improves object detection and image segmentation by focusing on important pixels.

- Healthcare – Used in medical diagnosis to highlight critical areas in scans and reports.

- Recommendation Systems – Attention enhances personalised content recommendations by analysing user preferences.

With the Ze Learning Labb courses in Data Science, Data Analytics, and Digital Marketing, learners can explore these concepts and apply them in real-world projects.

How to Learn the Attention Mechanism

If you want to master attention mechanisms, here’s how you can start:

- Step 1: Learn the Basics – Understand deep learning fundamentals, especially neural networks and the Transformer architecture.

- Step 2: Study Self-Attention & Multi-Head Attention – Work through how self-attention and multi-head attention operate and how they improve AI models.

- Step 3: Implement Attention in Code – Use TensorFlow or PyTorch to build attention-based models.

- Step 4: Take Professional Courses – Platforms like Ze Learning Labb offer practical training in Data Science and AI, making it easier to apply these concepts.

On A Final Note…

The attention mechanism in deep learning has transformed AI by enabling models to process data more efficiently. Whether it is NLP, vision, or recommendation systems, attention is the backbone of many modern AI models. Understanding how the attention mechanism works can help you build smarter and faster AI models.

Want to learn more about AI and deep learning? Check out Ze Learning Labb’s Data Science, Data Analytics, and Digital Marketing courses to gain hands-on experience in the field.