Candidate Elimination Algorithm in Machine Learning

Machine learning has many learning models, and one of the classic algorithms in concept learning is the candidate elimination algorithm. It helps a model move from training data to the set of all hypotheses that are consistent with the target concept.

Beginners often ask how this algorithm works and why it is used in concept learning. So, in this blog, we will cover its meaning, working process, an example, code, and the advantages.

What is Candidate Elimination Algorithm?

Many freshers in machine learning ask this question: What is the candidate elimination algorithm?

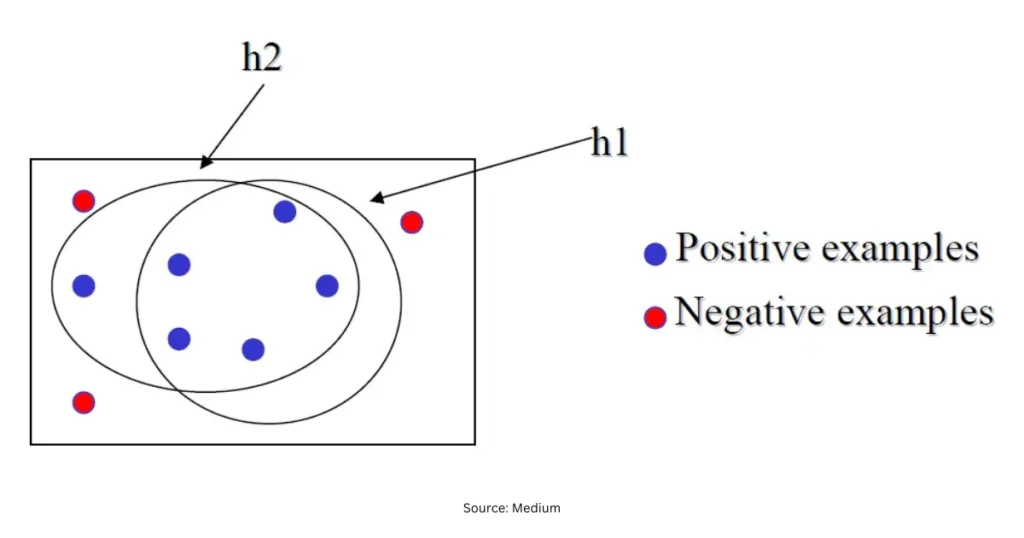

The Candidate Elimination Algorithm is a concept learning method that maintains two boundaries of hypotheses: the specific boundary S, holding the most specific consistent hypotheses, and the general boundary G, holding the most general consistent hypotheses. These two boundaries move closer together as more training examples are given. Everything between them forms the version space, which is the set of all hypotheses consistent with the training data seen so far.

To put it simply, the more examples you provide, the more refined your hypothesis boundaries become.

The candidate elimination algorithm works by eliminating every hypothesis that is inconsistent with a training example and keeping only those that remain consistent with the data.
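To make "keeping only consistent hypotheses" concrete, here is a brute-force sketch. It is the simpler List-Then-Eliminate approach, not candidate elimination itself: it lists every conjunctive hypothesis and discards any that misclassifies a training example. The two training rows come from the play-outdoors example in this post, but the attribute domains (Sunny/Rainy, Low/High, Warm/Cold) are assumptions, and `covers` and `list_then_eliminate` are hypothetical names.

```python
from itertools import product

# Assumed attribute domains (hypothetical values, for illustration only).
DOMAINS = [("Sunny", "Rainy"), ("Low", "High"), ("Warm", "Cold")]


def covers(h, x):
    """A conjunctive hypothesis covers x if every slot is '?' or equals x's value."""
    return all(hv == "?" or hv == xv for hv, xv in zip(h, x))


def list_then_eliminate(examples, domains):
    """Enumerate every hypothesis and keep those consistent with all examples."""
    # Each slot is either a specific value or the wildcard '?'.
    # The all-Ø hypothesis is left out: it covers nothing, so it is
    # inconsistent as soon as there is one positive example.
    candidates = product(*[list(d) + ["?"] for d in domains])
    return [h for h in candidates
            if all(covers(h, x) == label for x, label in examples)]


examples = [
    (("Sunny", "Low", "Warm"), True),    # Play = Yes
    (("Sunny", "High", "Warm"), False),  # Play = No
]

for h in list_then_eliminate(examples, DOMAINS):
    print(h)
```

For these two rows it prints four hypotheses, each with Wind fixed to Low. The catch is cost: the number of hypotheses grows exponentially with the number of attributes, which is why candidate elimination stores only the two boundaries.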

Why do we need this algorithm?

If you are learning concept learning models, you may wonder: Why do we need both general and specific boundaries? Why can't we just keep one?

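Because one boundary alone cannot describe every hypothesis that fits the data. Early in training, the model knows very little, and committing to a single hypothesis risks being too specific (overfitting) or too general (underfitting). By keeping both S and G and slowly eliminating wrong assumptions, the candidate elimination algorithm represents the whole version space: any hypothesis lying between S and G is consistent with the examples seen so far.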

How does the Candidate Elimination Algorithm work?

It works in three simple steps:

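- Start with S holding the most specific hypothesis (every attribute Ø) and G holding the most general hypothesis (every attribute ?).

- For each positive training example, remove from G any hypothesis that does not cover it, and minimally generalise S so that it does.

- For each negative training example, remove from S any hypothesis that covers it, and minimally specialise the hypotheses in G so that they exclude it while staying more general than S.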

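Through these steps, the two boundaries converge. If S and G end up equal, the target concept has been learned exactly; if either becomes empty, no hypothesis in the space fits the data.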
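The three steps can be sketched in Python for conjunctive hypotheses. This is a minimal sketch under stated assumptions: each hypothesis is a tuple with one slot per attribute, "?" means "any value", "Ø" means "no value", and the attribute domains are known in advance (they are needed to specialise G). The function and helper names are hypothetical, and the domain values for the play-outdoors example below are made up.

```python
# Hypothesis = tuple with one slot per attribute.
# "?" accepts any value; "Ø" accepts none (assumed notation).
WILD, EMPTY = "?", "Ø"

# Assumed attribute domains for the play-outdoors example (hypothetical values).
DOMAINS = [("Sunny", "Rainy"), ("Low", "High"), ("Warm", "Cold")]


def covers(h, x):
    """True if hypothesis h classifies instance x as positive."""
    return all(hv == WILD or hv == xv for hv, xv in zip(h, x))


def more_general_or_equal(h1, h2):
    """True if h1 covers every instance that h2 covers."""
    if EMPTY in h2:  # h2 covers nothing, so any h1 is at least as general
        return True
    return all(a == WILD or a == b for a, b in zip(h1, h2))


def generalize(s, x):
    """Minimal generalisation of s that covers the positive instance x."""
    return tuple(xv if sv == EMPTY else sv if sv == xv else WILD
                 for sv, xv in zip(s, x))


def specialize(g, x, domains):
    """Minimal specialisations of g that reject the negative instance x."""
    return [g[:i] + (value,) + g[i + 1:]
            for i, gv in enumerate(g) if gv == WILD
            for value in domains[i] if value != x[i]]


def run_candidate_elimination(examples, domains):
    n = len(domains)
    S = [(EMPTY,) * n]  # most specific boundary
    G = [(WILD,) * n]   # most general boundary
    for x, positive in examples:
        if positive:
            # Drop general hypotheses that miss the positive example.
            G = [g for g in G if covers(g, x)]
            # Generalise S just enough to cover x, staying below some g in G.
            S = [s if covers(s, x) else generalize(s, x) for s in S]
            S = [s for s in dict.fromkeys(S)
                 if any(more_general_or_equal(g, s) for g in G)]
            # Keep only the maximally specific members of S.
            S = [s for s in S
                 if not any(t != s and more_general_or_equal(s, t) for t in S)]
        else:
            # Drop specific hypotheses that wrongly cover the negative example.
            S = [s for s in S if not covers(s, x)]
            new_G = []
            for g in G:
                if not covers(g, x):
                    new_G.append(g)
                    continue
                # Specialise g minimally, keeping results still above some s in S.
                new_G += [h for h in specialize(g, x, domains)
                          if any(more_general_or_equal(h, s) for s in S)]
            new_G = list(dict.fromkeys(new_G))  # remove duplicates, keep order
            # Keep only the maximally general members of G.
            G = [g for g in new_G
                 if not any(t != g and more_general_or_equal(t, g) for t in new_G)]
    return S, G


examples = [
    (("Sunny", "Low", "Warm"), True),    # Play = Yes
    (("Sunny", "High", "Warm"), False),  # Play = No
]
S, G = run_candidate_elimination(examples, DOMAINS)
print("S:", S)
print("G:", G)
```

On the two example rows this prints S: [('Sunny', 'Low', 'Warm')] and G: [('?', 'Low', '?')]. S and G are kept as lists because the general boundary can hold several hypotheses at once; for instance, if the negative row came first, G would briefly contain three specialisations.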

Candidate Elimination Algorithm Example

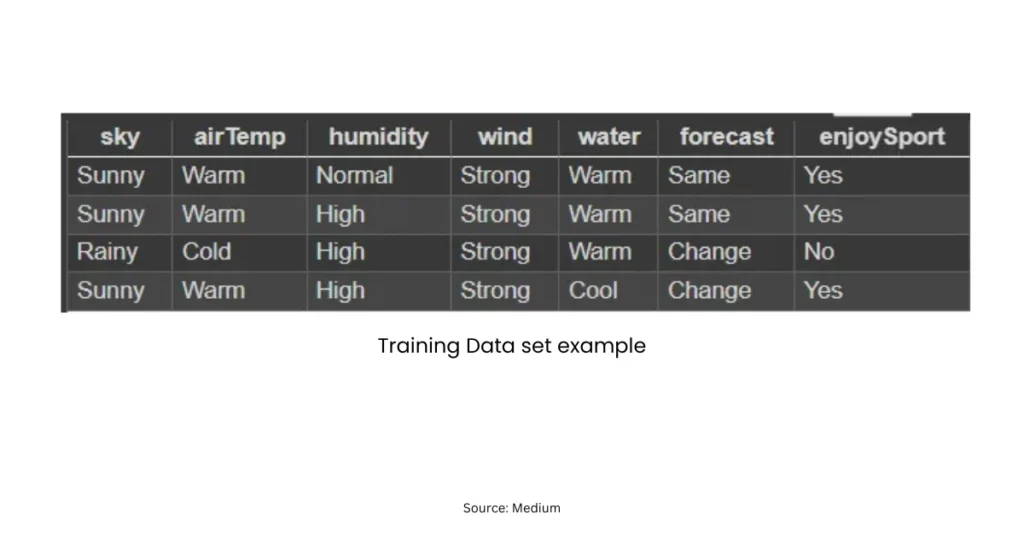

Let us take an easy-to-understand example dataset: deciding whether a day is good for playing outdoors.

Attributes: Weather, Wind, Temperature

| Weather | Wind | Temperature | Play |
|---|---|---|---|
| Sunny | Low | Warm | Yes |
| Sunny | High | Warm | No |

Here is how it evolves:

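- Initial:
  - Specific hypothesis S: [Ø, Ø, Ø]
  - General hypothesis G: [?, ?, ?]

- After the 1st (positive) example (Sunny, Low, Warm):
  - S is generalised to cover it: [Sunny, Low, Warm]
  - G stays [?, ?, ?], because it already covers the example

- After the 2nd (negative) example (Sunny, High, Warm):
  - S stays [Sunny, Low, Warm], because it already rejects the example
  - G gets specialised. Wind is the only attribute where the negative example differs from S, so the only minimal specialisation that still covers S is G: [?, Low, ?]

This simple candidate elimination algorithm example shows how the two boundaries move toward each other. The version space now holds every hypothesis between S and G: [Sunny, Low, Warm], [Sunny, Low, ?], [?, Low, Warm] and [?, Low, ?].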
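Because every consistent hypothesis lies between S and G, the version space can be read straight off the two boundaries. Working the negative step through, the only minimal specialisation of [?, ?, ?] that rejects (Sunny, High, Warm) and still covers S = [Sunny, Low, Warm] is [?, Low, ?], so the sketch below uses those two boundaries. It assumes conjunctive hypotheses written as tuples with "?" as the wildcard; `between` and `more_general_or_equal` are hypothetical helper names, not from any library.

```python
from itertools import product


def more_general_or_equal(h1, h2):
    """True if h1 covers every instance that h2 covers (no Ø slots assumed)."""
    return all(a == "?" or a == b for a, b in zip(h1, h2))


def between(s, g):
    """All hypotheses h with g >= h >= s."""
    # Any h at least as general as s keeps s's value or relaxes it to "?".
    options = [(sv,) if sv == "?" else (sv, "?") for sv in s]
    return [h for h in product(*options)
            if more_general_or_equal(g, h) and more_general_or_equal(h, s)]


S = ("Sunny", "Low", "Warm")
G = ("?", "Low", "?")
for h in between(S, G):
    print(h)
```

It prints four hypotheses, from ('Sunny', 'Low', 'Warm') up to ('?', 'Low', '?'). Listing only the slots that either keep S's value or relax it to "?" is enough here, since any hypothesis more general than S must look like that.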

Candidate Elimination Algorithm Code (Python)

Here is a basic, simplified Python version for beginners. This small snippet helps you see how the hypotheses get updated:

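```python
def candidate_elimination(concepts, targets):
    # Simplification: start S from the first training example
    # (this assumes the first example is positive).
    specific_h = concepts[0].copy()
    # Simplification: G is stored as an n x n grid of "?" instead of a set of hypotheses.
    general_h = [["?" for i in range(len(specific_h))] for j in range(len(specific_h))]

    for i, h in enumerate(concepts):
        if targets[i] == "Yes":
            # Positive example: generalise S wherever it disagrees with h.
            for x in range(len(specific_h)):
                if h[x] != specific_h[x]:
                    specific_h[x] = "?"
        else:
            # Negative example: where h differs from S, copy S's value onto
            # G's diagonal; otherwise reset that diagonal slot to "?".
            for x in range(len(specific_h)):
                if h[x] != specific_h[x]:
                    general_h[x][x] = specific_h[x]
                else:
                    general_h[x][x] = "?"

    return specific_h, general_h
```

If you are a beginner in machine learning, this code helps you visualise how the specific and general boundaries are updated, although it simplifies the algorithm considerably.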

What the code is doing (line-by-line explanation)

```python
def candidate_elimination(concepts, targets):
    specific_h = concepts[0].copy()
    general_h = [["?" for i in range(len(specific_h))] for j in range(len(specific_h))]
```

- `concepts`: a list of training instances, e.g. `[['Sunny','Warm','Normal'], ...]`.
- `targets`: labels for each instance, typically `"Yes"` (positive) or `"No"` (negative).
- `specific_h`: initialised to the first training example. This is a common beginner shortcut, and it only matches the formal algorithm when the first example is positive (formal CE starts S as the most specific hypothesis `['Ø', ...]`).
- `general_h`: created as a square matrix of `"?"`. This is unusual. In the standard algorithm, G is a set of the most general hypotheses, usually starting as a single hypothesis like `['?','?','?']`. Making a matrix here complicates things and leads to incorrect updates later.
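Why the shortcut works when the first example is positive: minimally generalising the formal starting point (every slot Ø) to cover a positive example returns that example unchanged. The sketch below assumes hypotheses are tuples with "Ø" for "no value" and "?" for "any value"; `generalize` is a hypothetical helper name.

```python
EMPTY, WILD = "Ø", "?"


def generalize(s, x):
    """Minimal generalisation of hypothesis s so that it covers instance x."""
    return tuple(
        xv if sv == EMPTY      # an empty slot takes the example's value
        else sv if sv == xv    # a matching slot stays as it is
        else WILD              # a conflicting slot becomes "?"
        for sv, xv in zip(s, x)
    )


formal_start = (EMPTY, EMPTY, EMPTY)
first_positive = ("Sunny", "Low", "Warm")
print(generalize(formal_start, first_positive))

# A hypothetical second positive example relaxes only the slot that conflicts.
print(generalize(first_positive, ("Rainy", "Low", "Warm")))
```

The first call gives back ('Sunny', 'Low', 'Warm'), which is exactly where the shortcut starts; if the first example were negative, the formal S would stay all-Ø while the shortcut would wrongly start from a negative instance.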

```python
    for i, h in enumerate(concepts):
        if targets[i] == "Yes":
            for x in range(len(specific_h)):
                if h[x] != specific_h[x]:
                    specific_h[x] = "?"
```

- For positive examples, the code generalises `specific_h`: wherever the attribute in `h` differs from `specific_h`, it replaces that slot with `"?"`.

This is roughly right for a single specific hypothesis, but the true algorithm also prunes G against the positive example, removing every general hypothesis that does not cover it.
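A minimal sketch of that pruning step, assuming hypotheses are tuples with "?" as the wildcard. The helper names `covers` and `prune_general` are hypothetical, and the general boundary below is made up purely for illustration.

```python
def covers(h, x):
    """True if every slot of h is "?" or equals the matching value in x."""
    return all(hv == "?" or hv == xv for hv, xv in zip(h, x))


def prune_general(G, x):
    """Keep only the general hypotheses that still cover positive instance x."""
    return [g for g in G if covers(g, x)]


# A made-up general boundary, for illustration only.
G = [("?", "Low", "?"), ("Sunny", "?", "?"), ("?", "?", "Cold")]
print(prune_general(G, ("Sunny", "Low", "Warm")))
```

Here ('?', '?', 'Cold') is dropped because it would label the positive example as negative; the other two hypotheses survive.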

```python
        else:
            for x in range(len(specific_h)):
                if h[x] != specific_h[x]:
                    general_h[x][x] = specific_h[x]
                else:
                    general_h[x][x] = "?"
```

- For negative examples, it tries to specialise `general_h` so that the negative instance is excluded.

However:

- Using `general_h[x][x] = specific_h[x]` (diagonal assignment) is not conceptually correct.

- Proper CE creates minimal specialisations of each hypothesis in G that remain more general than S but reject the negative example. It also removes from S any hypothesis that covers the negative example.
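A minimal sketch of that specialisation step for one general hypothesis, assuming conjunctive hypotheses as tuples with "?" as the wildcard and made-up attribute domains; `minimal_specialisations` and `more_general_or_equal` are hypothetical names.

```python
# Assumed attribute domains (hypothetical values for illustration).
DOMAINS = [("Sunny", "Rainy"), ("Low", "High"), ("Warm", "Cold")]


def more_general_or_equal(h1, h2):
    """True if h1 covers every instance that h2 covers (no Ø slots assumed)."""
    return all(a == "?" or a == b for a, b in zip(h1, h2))


def minimal_specialisations(g, x, s, domains):
    """Specialise g just enough to reject negative instance x, staying above s."""
    result = []
    for i, gv in enumerate(g):
        if gv != "?":
            continue  # a fixed slot already equals x[i] if g covers x
        for value in domains[i]:
            if value == x[i]:
                continue  # this value would still accept x
            h = g[:i] + (value,) + g[i + 1:]
            if more_general_or_equal(h, s):  # must still cover S
                result.append(h)
    return result


g = ("?", "?", "?")
negative = ("Sunny", "High", "Warm")
s = ("Sunny", "Low", "Warm")
print(minimal_specialisations(g, negative, s, DOMAINS))
```

This returns [('?', 'Low', '?')]: the candidates ('Rainy', '?', '?') and ('?', '?', 'Cold') also reject the negative example, but they no longer cover S. For comparison, the snippet from this post leaves `general_h` as `[['?', '?', '?'], ['?', 'Low', '?'], ['?', '?', '?']]` on the same two rows: the middle row matches, but the all-"?" rows would still accept the negative example.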

```python
    return specific_h, general_h
```

- Returns a single `specific_h` and the matrix-like `general_h`. The standard algorithm returns the sets S and G.

Advantages of Candidate Elimination Algorithm

Some major advantages of the candidate elimination algorithm are:

- It tracks all consistent hypotheses through the S and G boundaries, not just one.

- It reduces wrong assumptions by keeping only consistent concepts.

- It is helpful in concept learning and early-stage ML research.

- It gives a clear logical path from examples to boundaries.

- It supports version space learning effectively.

Because of these advantages, the candidate elimination algorithm is still taught in concept learning modules at many universities and training institutes.

When should students focus on this algorithm?

If you are preparing for interviews or want to learn concept-based algorithms in depth, this algorithm is helpful. It also prepares you for understanding other supervised learning methods.


On A Final Note…

The candidate elimination algorithm gives a clean way to narrow down hypotheses from both ends, generalising the specific boundary and specialising the general one as training data arrives. Anyone who wants to understand concept learning should know what the candidate elimination algorithm is, how to apply it to a clear example, and even try writing simple code for it.

It is still studied today because its advantages make it a clear illustration of how a learner refines its hypotheses through logical elimination.

FAQs

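What is the candidate elimination algorithm?

It is a concept learning method that maintains specific and general boundaries of hypotheses to form a version space.

Why is the candidate elimination algorithm used?

It is used to gradually remove inconsistent hypotheses and keep only the consistent ones.

What is a version space?

Version space is the set of all hypotheses that are consistent with the given training data.

What is the main advantage of the candidate elimination algorithm?

It provides transparency in learning and avoids premature assumptions.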