Linear Algebra in Machine Learning

Machine learning is changing the way industries work, from banking to healthcare. But behind all the impressive AI results, there’s a mathematical backbone that makes it all work: linear algebra.

In this blog, we’ll break it down into digestible pieces. We’ll walk through the basic concepts, why they matter, and how they’re used in real-world ML applications. So, if you’ve ever asked, “What is linear algebra?” or “Is linear algebra needed for machine learning?”, you’re in the right place.

What Is Linear Algebra?

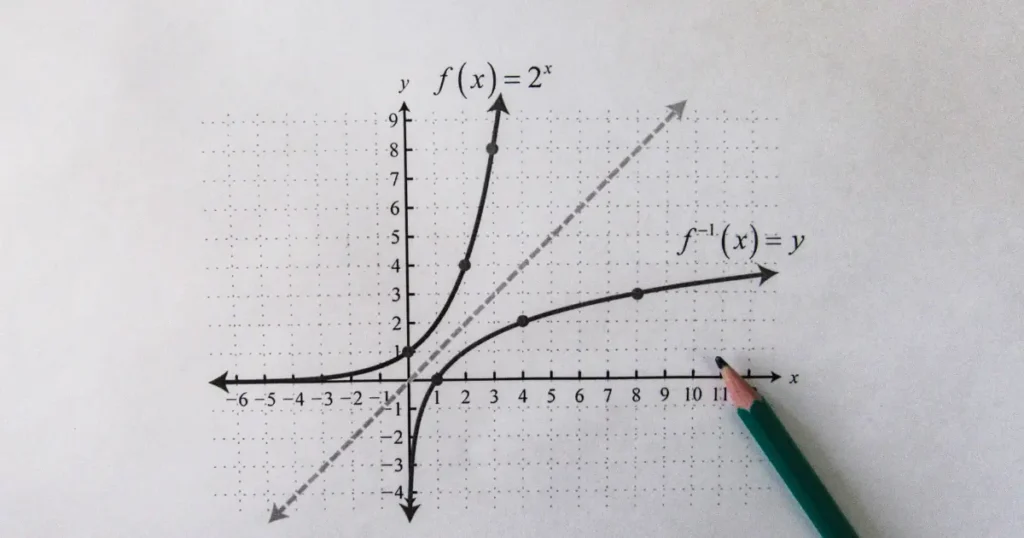

Let’s not make it complicated. Linear algebra is the branch of mathematics that deals with vectors, matrices, and linear transformations. It’s about how these objects behave, interact, and help solve equations. At its heart, it deals with equations of the form:

$$Ax = b$$

Where:

- A is a matrix (a grid of numbers)

- x is a vector (a list of numbers)

- b is the resulting vector, i.e. what you get when A transforms x
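To make Ax = b concrete, here is a minimal sketch in plain Python (no libraries, so each step is visible) that solves a small 2×2 system with Gaussian elimination. The function name `solve` and the example numbers are illustrative; in practice you would call a library routine such as NumPy’s `numpy.linalg.solve`.

```python
def solve(A, b):
    """Solve Ax = b for a square, non-singular A using Gaussian elimination."""
    n = len(A)
    # Build the augmented matrix [A | b] as floats so the inputs are not modified.
    M = [list(map(float, row)) + [float(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # Partial pivoting: move the row with the largest entry in this column up.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every row below the pivot row.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution, from the last row upwards.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

# 2x + y = 3 and x + 3y = 5
A = [[2, 1],
     [1, 3]]
b = [3, 5]
x = solve(A, b)
print(x)  # approximately [0.8, 1.4]
```

Pivoting on the largest available entry is a common choice because it keeps rounding errors from growing when a pivot is very small.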

In machine learning, data is usually represented in matrices, and operations on data involve vector calculations. That’s where linear algebra in ML comes in.

Why Does Machine Learning Need Linear Algebra?

Now, this might seem a bit abstract at first. So, here’s the deal: machine learning algorithms need to process data, identify patterns, and make predictions, and much of that work is mathematical calculation for which linear algebra is the go-to tool.

Here’s how linear algebra helps:

- Represents data in a structured way

- Performs mathematical operations to train models

- Reduces dimensions for faster processing (think PCA)

- Powers deep learning with tensor operations

As Stanford Professor Andrew Ng rightly said,

“Linear algebra is the mathematics of data.”

Basics of Linear Algebra for Machine Learning

If you’re just starting out, you don’t need to dive into high-level theorems. Focus on the following building blocks first:

1. Scalars, Vectors, Matrices, and Tensors

- Scalar – A single number.

- Vector – A list of numbers (like features of a dataset).

- Matrix – A 2D array of numbers (think of it as a table).

- Tensor – A multi-dimensional array (used a lot in deep learning).
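A quick way to see the difference between these four objects is to count their dimensions. The sketch below represents each one as plain Python nested lists and uses a small helper, `shape` (a hypothetical name that mirrors the `.shape` attribute NumPy arrays and PyTorch tensors provide), to report the size along each axis. It assumes the nested lists are regular, not ragged.

```python
def shape(t):
    """Return the shape of a scalar or a regular nested list as a tuple."""
    dims = []
    # Walk down through the first element at each level until we reach a number.
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0] if t else None
    return tuple(dims)

scalar = 3.5
vector = [1.0, 2.0, 3.0]          # e.g. three features of one data point
matrix = [[1, 2, 3],
          [4, 5, 6]]              # 2 rows (samples) x 3 columns (features)
tensor = [[[0, 0], [0, 0]],
          [[1, 1], [1, 1]],
          [[2, 2], [2, 2]]]       # 3 x 2 x 2, like a tiny stack of 2x2 images

for name, obj in [("scalar", scalar), ("vector", vector),
                  ("matrix", matrix), ("tensor", tensor)]:
    print(name, shape(obj))
# scalar ()
# vector (3,)
# matrix (2, 3)
# tensor (3, 2, 2)
```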

2. Matrix Multiplication

This is one of the most used operations in machine learning. Whether it’s feeding data through a neural network or calculating weights in linear regression, matrix multiplication is always at work.
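Here is a minimal pure-Python sketch of matrix multiplication, showing how a whole batch of inputs is multiplied by a weight matrix in one step. The names `matmul`, `X`, and `W` are illustrative; real projects would use NumPy’s `@` operator or a framework equivalent.

```python
def matmul(A, B):
    """Multiply an (m x n) matrix A by an (n x p) matrix B, returning an m x p matrix."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("inner dimensions must match")
    p = len(B[0])
    # Entry (i, j) is the dot product of row i of A with column j of B.
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(p)]
            for i in range(len(A))]

# Two samples with three features each (2 x 3) ...
X = [[1, 2, 3],
     [4, 5, 6]]
# ... times a weight matrix mapping 3 features to 2 outputs (3 x 2).
W = [[1, 0],
     [0, 1],
     [1, 1]]
print(matmul(X, W))  # [[4, 5], [10, 11]]
```

The inner dimensions (3 and 3 here) must agree, which is why shape errors are among the most common bugs when wiring up models.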

3. Dot Product and Cross Product

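The dot product is used throughout neural networks: each neuron computes the dot product of its weights and its inputs, adds a bias, and passes the result through an activation function, and the gradients used to optimise the loss function are built from the same kind of products. The cross product, by contrast, is defined only for 3D vectors and shows up mainly in geometry-heavy tasks such as 3D vision and robotics rather than in everyday model training.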
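The dot product is what a single artificial neuron computes before its activation function. The sketch below assumes a sigmoid activation and uses made-up weights purely for illustration; they are not learned values.

```python
import math

def dot(u, v):
    """Dot product: multiply matching entries and add them up."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    return sum(a * b for a, b in zip(u, v))

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]   # illustrative values, not trained ones
bias = 0.05

z = dot(weights, inputs) + bias   # 0.2 - 0.3 + 0.2 + 0.05, i.e. about 0.15
print(z, sigmoid(z))
```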

4. Eigenvalues and Eigenvectors

Used in dimensionality reduction, such as PCA (Principal Component Analysis), which helps simplify large datasets.
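An eigenvector of a matrix is a direction that the matrix only stretches, by a factor called the eigenvalue, without changing its direction: Av = λv. The sketch below checks this for a small symmetric 2×2 matrix, computing the eigenvalues from the characteristic polynomial. The helper names are illustrative, and this closed form only works for 2×2 matrices with real eigenvalues.

```python
import math

def eig2x2(M):
    """Eigenvalues of a 2x2 matrix with real eigenvalues, from its characteristic polynomial."""
    (a, b), (c, d) = M
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det
    if disc < 0:
        raise ValueError("complex eigenvalues are not handled in this sketch")
    root = math.sqrt(disc)
    return (tr + root) / 2, (tr - root) / 2

def matvec(M, v):
    """Multiply matrix M by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

C = [[2.0, 1.0],
     [1.0, 2.0]]   # symmetric, like a covariance matrix
l1, l2 = eig2x2(C)
print(l1, l2)      # 3.0 1.0

v = [1.0, 1.0]     # eigenvector for the eigenvalue 3
print(matvec(C, v))  # [3.0, 3.0], i.e. 3 * v: the direction is unchanged
```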

How Linear Algebra Powers Machine Learning Algorithms

Let’s look at some popular ML algorithms and where linear algebra fits in:

1. Linear Regression

Involves solving the equation:

$$y = Xw + b$$

Here, X is the input data (a matrix), w is the weight vector, and b is the bias (a scalar). Training the model uses matrix operations to find the values of w and b that minimise the prediction error.
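One standard way to find w and b is to solve the least-squares normal equations, (X^T X) θ = X^T y, where a column of ones is appended to X so that θ holds both w and b. The sketch below does this for a single feature in plain Python; the function name and the data are illustrative. Gradient descent is the usual alternative for large datasets, and libraries prefer more numerically stable, factorisation-based routines such as `numpy.linalg.lstsq`.

```python
def fit_linear_regression(xs, ys):
    """Fit y = w * x + b by least squares using the normal equations.

    Appending a column of ones to X turns the bias into an extra weight,
    so we solve (X^T X) theta = X^T y for theta = [w, b].
    """
    X = [[x, 1.0] for x in xs]
    # X^T X is 2 x 2 and X^T y has length 2.
    XtX = [[sum(row[i] * row[j] for row in X) for j in range(2)] for i in range(2)]
    Xty = [sum(row[i] * y for row, y in zip(X, ys)) for i in range(2)]
    # Solve the 2 x 2 system with Cramer's rule.
    det = XtX[0][0] * XtX[1][1] - XtX[0][1] * XtX[1][0]
    if det == 0:
        raise ValueError("X^T X is singular (e.g. all x values are equal)")
    w = (Xty[0] * XtX[1][1] - XtX[0][1] * Xty[1]) / det
    b = (XtX[0][0] * Xty[1] - Xty[0] * XtX[1][0]) / det
    return w, b

xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]   # generated from y = 2x + 1, with no noise
w, b = fit_linear_regression(xs, ys)
print(w, b)  # 2.0 1.0
```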

2. Support Vector Machines (SVM)

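A linear SVM separates classes with a hyperplane defined by a weight vector w and a bias b, and it classifies a point x by the sign of the dot product w · x plus b. Training picks the hyperplane with the widest margin between the classes; kernel SVMs replace the dot product between data points with a kernel function to draw non-linear boundaries.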
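Once a linear SVM has been trained, classifying a new point comes down to a dot product: compute w · x + b and look at its sign. The sketch below assumes the weight vector and bias are already known (the values are made up, and training them is not shown); it also computes each point’s geometric distance to the hyperplane, which is the quantity the margin is built from.

```python
import math

def decision(w, b, x):
    """Signed score w . x + b; its sign gives the predicted class."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def distance_to_hyperplane(w, b, x):
    """Geometric distance from x to the hyperplane w . x + b = 0."""
    return abs(decision(w, b, x)) / math.sqrt(sum(wi * wi for wi in w))

# Hypothetical parameters of an already-trained linear SVM in 2D.
w = [3.0, 4.0]
b = -5.0

for point in ([2.0, 2.0], [0.0, 0.0]):
    score = decision(w, b, point)
    label = 1 if score >= 0 else -1
    print(point, label, distance_to_hyperplane(w, b, point))
# [2.0, 2.0] 1 1.8
# [0.0, 0.0] -1 1.0
```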

3. Principal Component Analysis (PCA)

This is a dimensionality reduction technique. It uses eigenvectors and eigenvalues from the covariance matrix of the dataset to identify the main axes of variance.
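The PCA recipe above can be sketched in plain Python: centre the data, build the covariance matrix, and find its top eigenvector. This sketch uses power iteration (repeated multiplication by the covariance matrix) to get only the first principal component, which assumes the largest eigenvalue is clearly separated from the rest. The function name and data are illustrative; libraries such as scikit-learn’s `PCA` compute the components via SVD instead.

```python
import math

def pca_first_component(data, iterations=100):
    """Return the first principal component of `data` (a list of rows) and its eigenvalue."""
    n, d = len(data), len(data[0])
    # Centre each column by subtracting its mean.
    means = [sum(row[j] for row in data) / n for j in range(d)]
    centred = [[row[j] - means[j] for j in range(d)] for row in data]
    # Sample covariance matrix: C = (1 / (n - 1)) * Xc^T Xc.
    cov = [[sum(r[i] * r[j] for r in centred) / (n - 1) for j in range(d)]
           for i in range(d)]
    # Power iteration: repeatedly multiply by C and renormalise to unit length.
    v = [1.0] * d
    for _ in range(iterations):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # The Rayleigh quotient v^T C v gives the matching eigenvalue (variance along v).
    eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
    return v, eigenvalue

# Points scattered along the line y = x, so most of the variance lies in that direction.
data = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.8], [5.0, 5.0]]
component, variance = pca_first_component(data)
print(component, variance)
```

Projecting each centred point onto `component` (a dot product) would then reduce this 2D data to a single coordinate while keeping most of its variance.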

4. Neural Networks

Most layers in a neural network transform their input with a linear operation (a matrix multiplication in fully connected layers) followed by a non-linear activation function. Backpropagation, which computes the gradients used to adjust the weights, also relies on matrix operations.
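A forward pass through a small fully connected network is repeated matrix-vector products with a non-linearity in between. The sketch below assumes ReLU as the activation and uses made-up weights for a tiny 3 → 2 → 1 network; backpropagation, which would compute the gradients for updating those weights, is not shown.

```python
def matvec(W, x):
    """Multiply a weight matrix W (out x in) by an input vector x (length in)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def relu(v):
    """Element-wise ReLU non-linearity: max(0, value)."""
    return [max(0.0, a) for a in v]

def dense(W, b, x):
    """One fully connected layer before its activation: W x + b."""
    return [z + bi for z, bi in zip(matvec(W, x), b)]

# Hypothetical weights (normally learned by training).
W1 = [[0.2, -0.5, 1.0],
      [0.7, 0.1, -0.3]]
b1 = [0.0, 0.1]
W2 = [[1.5, -2.0]]
b2 = [0.5]

x = [1.0, 2.0, 0.5]
hidden = relu(dense(W1, b1, x))   # layer 1: matrix product, then non-linearity
output = dense(W2, b2, hidden)    # layer 2: another matrix product
print(hidden, output)             # roughly [0.0, 0.85] and [-1.2]
```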

“Without linear algebra, training a neural network would be nearly impossible,” as stated by DeepMind researchers in their 2023 whitepaper on deep learning architectures.

Applications of Linear Algebra in Machine Learning

Wondering how it works in the real world? Let’s explore some practical applications of linear algebra in machine learning:

- Image Recognition: Converting images into pixel matrices and performing convolutions

- Natural Language Processing (NLP): Word embeddings like Word2Vec are vector-based representations

- Recommendation Systems: Matrix factorisation is used to predict user preferences

- Face Recognition: Eigenfaces, a technique built on PCA, represents faces using eigenvectors

- Speech Recognition: Sound waves are converted into sequences of feature vectors for processing
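Matrix factorisation for recommendations can be sketched by giving each user and each item a short vector and predicting a rating as their dot product. The sketch below learns these vectors with stochastic gradient descent on the observed ratings only. The tiny ratings list, the hyperparameters (`k`, `lr`, `reg`, `epochs`), and the function names are illustrative assumptions, not a specific production system.

```python
import random

def factorise(ratings, k=2, lr=0.01, reg=0.02, epochs=1000, seed=0):
    """Learn factor vectors U[user] and V[item] so that dot(U[u], V[i]) approximates r.

    `ratings` is a list of (user, item, rating) triples for the observed entries only.
    """
    rng = random.Random(seed)
    n_users = 1 + max(u for u, _, _ in ratings)
    n_items = 1 + max(i for _, i, _ in ratings)
    U = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_users)]
    V = [[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_items)]
    for _ in range(epochs):
        for u, i, r in ratings:
            err = r - predict(U, V, u, i)
            # Stochastic gradient step on squared error with L2 regularisation.
            for f in range(k):
                uf, vf = U[u][f], V[i][f]
                U[u][f] += lr * (err * vf - reg * uf)
                V[i][f] += lr * (err * uf - reg * vf)
    return U, V

def predict(U, V, u, i):
    """Predicted rating: dot product of the user and item vectors."""
    return sum(a * b for a, b in zip(U[u], V[i]))

def mse(U, V, ratings):
    """Mean squared error on the given ratings."""
    return sum((r - predict(U, V, u, i)) ** 2 for u, i, r in ratings) / len(ratings)

# (user, item, rating) triples; pairs that are not listed are unrated.
ratings = [(0, 0, 5), (0, 1, 3), (1, 0, 4), (1, 2, 1), (2, 1, 1), (2, 2, 5)]
U, V = factorise(ratings)
print("training MSE:", mse(U, V, ratings))
print("predicted rating of user 0 for item 2:", predict(U, V, 0, 2))
```

The unrated pairs are exactly where the predictions are useful: the learned vectors fill in the missing entries of the user-item matrix.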

These aren’t just theories; they’re how real AI products work today.


What’s Confusing So Far?

At this point, you might be asking:

- “Do I need to be a maths expert to learn machine learning?”

- “Can I skip linear algebra if I just want to use tools?”

Here’s the truth: tools like scikit-learn, TensorFlow, and PyTorch make it easier to work with models. But understanding the basics of linear algebra for machine learning helps you fine-tune models, debug errors, and grasp how things really work under the hood.

So, no, you don’t need a PhD in maths. But yes, some knowledge of linear algebra will help you get better results from machine learning.

Linear Algebra Concepts Every ML Enthusiast Should Know

Here’s a cheat sheet for your studies:

- Vector addition and scalar multiplication

- Matrix-vector and matrix-matrix multiplication

- Transpose, inverse, and determinant of matrices

- Dot product and outer product

- Norms (L1 and L2)

- Singular Value Decomposition (SVD)

These topics often show up in ML, data science, and even AI research papers.
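Most items on this cheat sheet take only a line or two of code. The sketch below implements several of them in plain Python with illustrative helper names (the inverse and determinant are restricted to 2×2 matrices for brevity). SVD is left to a library routine such as `numpy.linalg.svd`, since a from-scratch version is far longer.

```python
import math

def transpose(M):
    """Swap rows and columns."""
    return [list(col) for col in zip(*M)]

def det2(M):
    """Determinant of a 2 x 2 matrix."""
    (a, b), (c, d) = M
    return a * d - b * c

def inverse2(M):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = M
    det = det2(M)
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def outer(u, v):
    """Outer product: the matrix whose (i, j) entry is u[i] * v[j]."""
    return [[a * b for b in v] for a in u]

def l1_norm(v):
    """Sum of absolute values."""
    return sum(abs(a) for a in v)

def l2_norm(v):
    """Euclidean length."""
    return math.sqrt(sum(a * a for a in v))

u, v = [1, 2], [3, -4]
print([a + b for a, b in zip(u, v)])       # vector addition: [4, -2]
print([2 * a for a in u])                  # scalar multiplication: [2, 4]
print(transpose([[1, 2, 3], [4, 5, 6]]))   # [[1, 4], [2, 5], [3, 6]]
print(det2([[4, 7], [2, 6]]))              # 4*6 - 7*2 = 10
print(inverse2([[4, 7], [2, 6]]))          # [[0.6, -0.7], [-0.2, 0.4]]
print(outer(u, v))                         # [[3, -4], [6, -8]]
print(l1_norm(v), l2_norm(v))              # 7 5.0
```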

Learn by Doing: Best Resources to Master It

Don’t just read, practice! These resources can help:

- 3Blue1Brown’s Essence of Linear Algebra (YouTube)

- MIT OpenCourseWare (for example, Gilbert Strang’s 18.06 Linear Algebra)

- fast.ai’s Computational Linear Algebra for Coders

Why Indian Students Should Learn Linear Algebra for ML

If you’re an engineering student or IT professional in India, there’s a strong demand for AI and machine learning skills. But to stand out, you need more than just Python libraries. Understanding linear algebra and its applications gives you an edge in interviews, research, and even freelancing.

Many data science roles look for candidates who “understand how models work,” and that understanding leads straight back to linear algebra in machine learning.

On A Final Note…

To sum it up, linear algebra in machine learning is like oxygen: mostly invisible, but absolutely essential. It helps process data, train models, and optimise predictions. From basic operations to deep learning applications, linear algebra plays a key role in how intelligent systems understand and act on data.

So, if you’re serious about building a career in AI or ML, don’t skip this foundation. Start slow, use simple tools, and grow as you go.

Frequently Asked Questions (FAQs)

Q1: What is linear algebra and why is it used in ML?

Linear algebra is the study of vectors and matrices. It helps represent and manipulate data in machine learning models.

Q2: Is linear algebra hard to learn for machine learning?

Not at all! Start with basics like vectors and matrices. You don’t need to learn everything, just the parts relevant to ML.

Q3: Can I use ML tools without knowing linear algebra?

Yes, but your understanding will be limited. Learning it improves your ability to troubleshoot and customise models.

Q4: Are there any shortcuts to learning linear algebra for ML?

Focus only on practical concepts used in ML. Don’t get lost in theoretical proofs unless you’re doing research.

Q5: Which topics in linear algebra are most used in machine learning?

Matrix multiplication, vector operations, eigenvalues, eigenvectors, and SVD.