What is a decision tree in machine learning? Ever found yourself making a decision by weighing options step by step? For example, deciding whether to carry an umbrella:

- If it’s cloudy, you think about the weather forecast.

- If rain is predicted, you take the umbrella.

- If not, you skip it.

This is exactly how a decision tree in machine learning works. It helps machines make logical choices by asking a series of yes-or-no style questions.

But what is a decision tree in machine learning, technically? Let’s take a closer look.

What is a Decision Tree in Machine Learning?

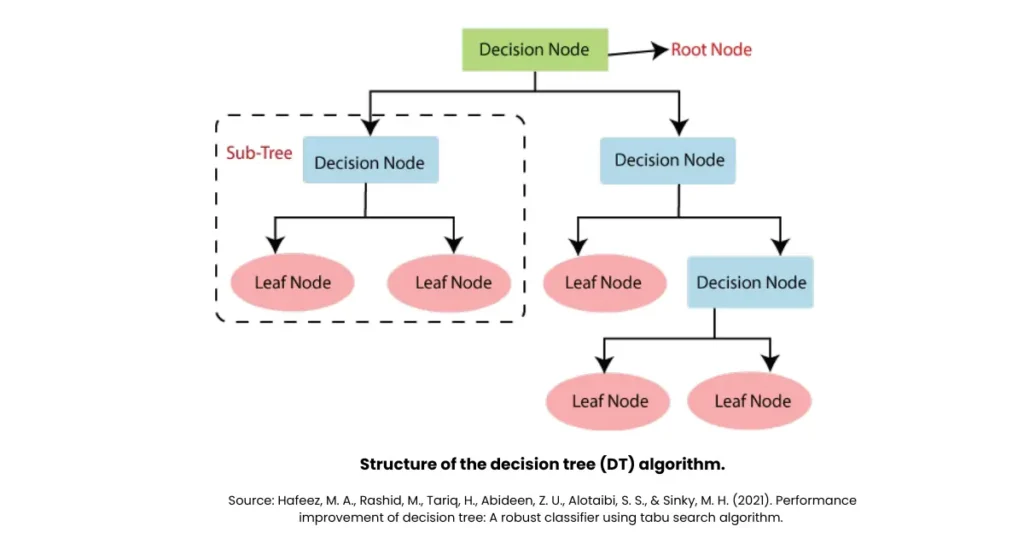

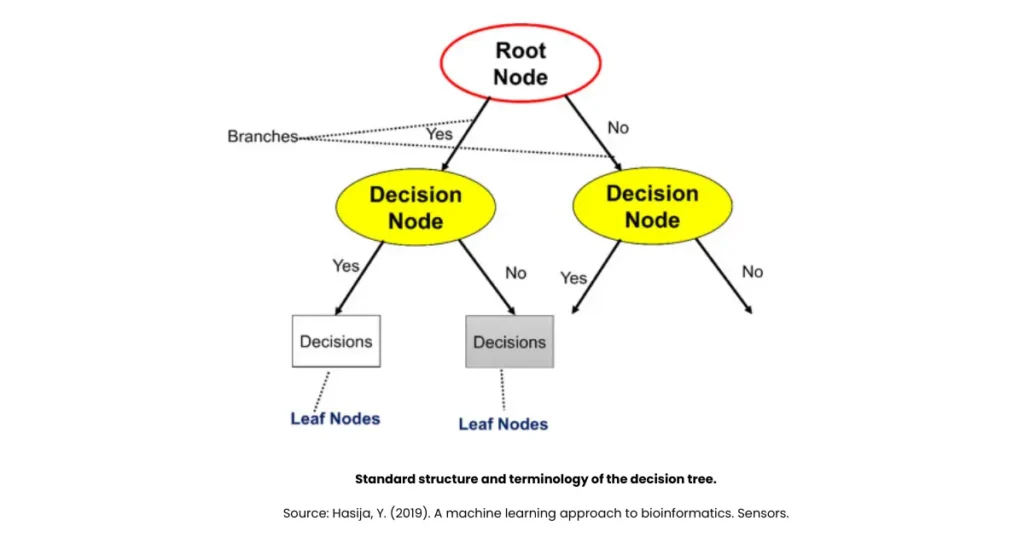

A decision tree in machine learning is a supervised learning method used for both classification and regression tasks. It represents decisions in a tree-like structure where:

- The root node represents the initial question.

- Internal (decision) nodes represent follow-up questions.

- Branches represent the possible answers to each question.

- Leaf nodes represent the final outcomes (predictions).

In simple words, the model splits the dataset into smaller parts step by step, based on features, until a final decision (output) is reached.
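The tree structure described above can be sketched as a small data structure. This is a minimal illustration, not a library API: the `Node`, `Leaf` and `predict` names are hypothetical, and the tree is hand-built from the umbrella example, assuming you skip the umbrella when it isn’t cloudy (the example doesn’t say).

```python
from dataclasses import dataclass
from typing import Dict, Union


@dataclass
class Leaf:
    """Leaf node: holds a final outcome."""
    outcome: str


@dataclass
class Node:
    """Root or internal node: asks a question about one feature.

    `branches` maps each possible answer to the child node it leads to.
    """
    feature: str
    branches: Dict[str, Union["Node", Leaf]]


def predict(tree: Union[Node, Leaf], example: Dict[str, str]) -> str:
    """Follow one branch per question until a leaf is reached."""
    current = tree
    while isinstance(current, Node):
        current = current.branches[example[current.feature]]
    return current.outcome


# Hand-built from the umbrella example; the "not cloudy" branch is an assumption.
umbrella_tree = Node(
    feature="cloudy",
    branches={
        "yes": Node(
            feature="rain_predicted",
            branches={"yes": Leaf("take umbrella"), "no": Leaf("skip umbrella")},
        ),
        "no": Leaf("skip umbrella"),
    },
)

print(predict(umbrella_tree, {"cloudy": "yes", "rain_predicted": "yes"}))  # take umbrella
print(predict(umbrella_tree, {"cloudy": "no"}))                            # skip umbrella
```

Making a prediction is just a walk from the root to one leaf, asking one question per level, which is why trained trees are quick to query.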

As Tom Mitchell wrote in Machine Learning (1997), “Decision trees provide a simple yet effective way of representing classification models.”

Now, when someone asks, “What is a decision tree in machine learning?”, the simplest answer you can give is: it’s a way for computers to make decisions by breaking a problem into a flow of smaller questions and answers.

Decision Tree Algorithm in Machine Learning

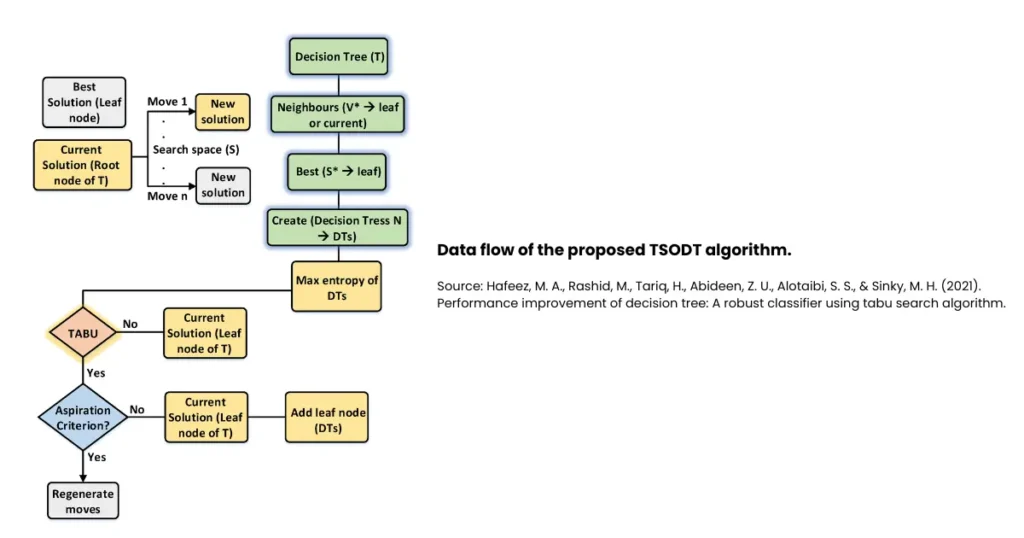

So, how does the decision tree algorithm in machine learning actually work? Let’s break it down step by step:

- Select the Root Node: Choose the feature that best splits the data, as measured by a criterion such as Gini Impurity or Information Gain.

- Split the Data: Based on the selected feature (and, for a numerical feature, a threshold), divide the data into subsets.

- Repeat the Process: Treat each subset as a smaller dataset and apply the same splitting process to it.

- Stop at a Leaf Node: The process ends when a subset is pure (all one class), no split improves it, or a stopping rule such as a maximum depth is reached. The leaf then predicts the majority class (classification) or the average value (regression) of its subset.
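The four steps above can be sketched in plain Python as a small CART-style learner. This is a simplified sketch under stated assumptions, not a production implementation: features are numerical, every split is binary (`feature <= threshold`), Gini Impurity is the criterion, and `max_depth` is a hypothetical stopping rule. The toy data loosely follows the student example below (hours studied per day, attendance rate) and is made up for illustration.

```python
from collections import Counter
from typing import List, Optional, Tuple


def gini(labels: List[str]) -> float:
    """Gini impurity: 1 - sum of squared class proportions (0.0 = pure subset)."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(rows: List[List[float]], labels: List[str]) -> Optional[Tuple[int, float]]:
    """Step 1: try every (feature, threshold) pair; keep the lowest weighted Gini."""
    n = len(labels)
    best, best_score = None, gini(labels)  # a split must improve on the unsplit node
    for f in range(len(rows[0])):
        for t in sorted({row[f] for row in rows}):
            left = [y for row, y in zip(rows, labels) if row[f] <= t]
            right = [y for row, y in zip(rows, labels) if row[f] > t]
            if not right:  # threshold at the maximum value: nothing to split off
                continue
            score = len(left) / n * gini(left) + len(right) / n * gini(right)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def build_tree(rows, labels, depth=0, max_depth=3):
    """Steps 2-4: split on the best question, recurse on each subset, stop at a leaf."""
    split = best_split(rows, labels)
    if split is None or depth == max_depth:
        return Counter(labels).most_common(1)[0][0]  # leaf: majority class
    f, t = split
    left = [i for i, row in enumerate(rows) if row[f] <= t]
    right = [i for i, row in enumerate(rows) if row[f] > t]
    return {
        "feature": f,
        "threshold": t,
        "left": build_tree([rows[i] for i in left], [labels[i] for i in left], depth + 1, max_depth),
        "right": build_tree([rows[i] for i in right], [labels[i] for i in right], depth + 1, max_depth),
    }


def predict(tree, row):
    """Walk the thresholds from the root until a leaf label is reached."""
    while isinstance(tree, dict):
        tree = tree["left"] if row[tree["feature"]] <= tree["threshold"] else tree["right"]
    return tree


# Hypothetical toy data: [hours studied per day, attendance rate]
rows = [[4.0, 0.2], [5.0, 0.3], [4.5, 0.9], [3.5, 0.25],
        [1.0, 0.9], [2.0, 0.8], [1.5, 0.2], [2.5, 0.3]]
labels = ["pass", "pass", "pass", "pass", "pass", "pass", "fail", "fail"]

tree = build_tree(rows, labels)
print(predict(tree, [1.0, 0.1]))  # fail: little study and low attendance
print(predict(tree, [6.0, 0.1]))  # pass: plenty of study
```

On this toy data the learned root question is “hours studied ≤ 2.5?”, followed by an attendance check only for students who studied less. For simplicity the sketch uses observed values as thresholds; many implementations (scikit-learn’s, for example) place thresholds midway between adjacent sorted values and offer further stopping rules, such as a minimum number of samples per leaf.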


Example in words: If you want to predict whether a student will pass an exam:

- Root Node: “Did the student study for more than 3 hours daily?”

- Branch: Yes → Higher chance of passing.

- Branch: No → Next check: “Did the student attend classes regularly?”

- And so on… until the tree ends in a leaf node prediction.

This is why decision trees in machine learning are considered intuitive and easy to understand.

Decision Tree in Machine Learning Example

Let’s make it more relatable with a real-world example:

Loan Approval in Banking

Imagine a bank trying to decide whether to approve a loan:

- Root Node: “Is the applicant employed?”
  - Yes → Next question.
  - No → Reject loan.

- Question 2: “Is the applicant’s salary above ₹40,000 per month?”
  - Yes → Next question.
  - No → Higher risk → Reject.

- Question 3: “Is the credit score above 700?”
  - Yes → Approve loan.
  - No → Reject loan.

This flow of questions is how a decision tree in ML works. It mimics the way a bank officer reasons, except that in a trained model the questions and thresholds are learned from historical data rather than set by hand.
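Written out by hand, the loan-approval tree is just a chain of nested conditions. The function and parameter names below are hypothetical, and the thresholds are the illustrative ones from the example.

```python
def approve_loan(employed: bool, monthly_salary_inr: float, credit_score: int) -> str:
    """Hand-coded version of the loan-approval tree (illustrative thresholds)."""
    # Root node: "Is the applicant employed?"
    if not employed:
        return "Reject"
    # Question 2: "Is the salary above ₹40,000 per month?"
    if monthly_salary_inr <= 40_000:
        return "Reject"  # higher risk
    # Question 3: "Is the credit score above 700?"
    if credit_score <= 700:
        return "Reject"
    return "Approve"


print(approve_loan(True, 55_000, 720))   # Approve
print(approve_loan(True, 55_000, 650))   # Reject
print(approve_loan(False, 90_000, 800))  # Reject
```

Each `if` corresponds to one node, and each `return` is a leaf. A learning algorithm produces the same kind of structure, but picks the questions and cut-off values from data.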

Types of Decision Trees in Machine Learning

Decision trees can be grouped by the type of output they predict and by the algorithm used to build them:

- Classification Trees: Used when the output is categorical (e.g., Yes/No, Pass/Fail).

- Regression Trees: Used when the output is continuous (e.g., predicting house prices).

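- ID3 (Iterative Dichotomiser 3): Splits data using Information Gain, which is computed from entropy.

- C4.5 Algorithm: An improvement over ID3. It splits using Gain Ratio and also handles continuous features and missing values.

- CART (Classification and Regression Trees): Popular and widely used. It builds binary trees and works for both classification (typically using Gini Impurity) and regression (using variance, or squared-error, reduction).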

These types of decision trees allow data scientists to apply the technique flexibly, depending on the dataset and the problem.
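The splitting criteria named above can each be computed in a few lines. This sketch assumes labels are plain Python lists and that every child subset is non-empty; the function names are illustrative, not taken from any library. `information_gain` is the ID3 criterion, `gain_ratio` divides it by the split’s own entropy as C4.5 does, and `variance_reduction` is the squared-error criterion CART typically uses for regression trees.

```python
import math
from collections import Counter
from typing import List, Sequence


def entropy(labels: Sequence) -> float:
    """Shannon entropy in bits: -sum(p * log2(p)) over the classes present."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(parent: Sequence, children: List[Sequence]) -> float:
    """ID3: parent entropy minus the size-weighted entropy of the child subsets."""
    n = len(parent)
    return entropy(parent) - sum(len(ch) / n * entropy(ch) for ch in children)


def gain_ratio(parent: Sequence, children: List[Sequence]) -> float:
    """C4.5: information gain divided by the split information (entropy of subset sizes)."""
    n = len(parent)
    split_info = -sum(len(ch) / n * math.log2(len(ch) / n) for ch in children)
    return information_gain(parent, children) / split_info if split_info > 0 else 0.0


def variance_reduction(parent: Sequence[float], children: List[Sequence[float]]) -> float:
    """Regression trees (CART-style): drop in size-weighted variance (mean squared error)."""
    def variance(ys):
        mean = sum(ys) / len(ys)
        return sum((y - mean) ** 2 for y in ys) / len(ys)

    n = len(parent)
    return variance(parent) - sum(len(ch) / n * variance(ch) for ch in children)


parent = ["yes", "yes", "no", "no"]
perfect_split = [["yes", "yes"], ["no", "no"]]
print(information_gain(parent, perfect_split))  # 1.0
print(gain_ratio(parent, perfect_split))        # 1.0
print(variance_reduction([1.0, 1.0, 5.0, 5.0], [[1.0, 1.0], [5.0, 5.0]]))  # 4.0
```

A perfect split removes all of the parent’s uncertainty (1 bit for a 50/50 parent), so Information Gain reaches its maximum; for a regression target, a split that separates the low and high values removes all of the variance.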

Advantages and Disadvantages of Decision Trees

Like every model, decision trees have strengths and weaknesses. Let’s look at both.

Advantages:

- Easy to Interpret: Even non-technical people can understand them.

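- No Need for Data Scaling: Splits compare a feature against a threshold, so rescaling a feature doesn’t change which split is chosen. Unlike SVM or KNN, no standardisation is required.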

- Handles Both Types of Data: Works well with categorical and numerical data.

- Fast and Efficient: For small to medium datasets, it’s quick to build and predict.
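The “no scaling” advantage follows from how splits work: a threshold split depends only on the order of a feature’s values, and standardisation preserves that order. The sketch below checks this on made-up salary data; `best_partition` is a hypothetical helper that returns which samples go to the left branch of the best Gini split on a single feature.

```python
import statistics
from typing import List, Tuple


def gini(labels: List[int]) -> float:
    """Gini impurity of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))


def best_partition(xs: List[float], ys: List[int]) -> Tuple[int, ...]:
    """Indices sent left by the lowest-Gini threshold split on one feature."""
    best_score, best_left = float("inf"), ()
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not right:
            continue
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best_score:
            best_score = score
            best_left = tuple(i for i, x in enumerate(xs) if x <= t)
    return best_left


raw = [20_000.0, 35_000.0, 50_000.0, 80_000.0, 120_000.0]  # hypothetical monthly salaries
labels = [0, 0, 1, 1, 1]                                     # hypothetical: 0 = reject, 1 = approve

# Standardise: subtract the mean, divide by the (population) standard deviation.
mu, sigma = statistics.mean(raw), statistics.pstdev(raw)
standardised = [(x - mu) / sigma for x in raw]

print(best_partition(raw, labels))           # (0, 1)
print(best_partition(standardised, labels))  # (0, 1)
```

Both calls send the same samples to the left branch; only the numeric threshold differs. Distance-based models such as KNN, by contrast, would weigh a feature measured in rupees very differently from one measured in standard deviations.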

Disadvantages:

- Overfitting: An unrestricted tree can keep splitting until it memorises the training data, noise included. Limiting its depth or pruning it helps.

- Instability: A small change in data may completely change the structure of the tree.

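- Bias Towards Features with Many Levels: With criteria like Information Gain, features with many distinct values (levels) can dominate the splits even when they carry little real signal.

- Not Always Accurate Alone: A single tree is often less accurate than an ensemble of trees, which is why methods like Random Forest are often preferred.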

As researchers from the University of California once noted, “Decision trees are simple to understand but prone to instability, making them better as part of ensemble techniques.”
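The bias towards features with many levels is easy to reproduce on toy data. In this sketch, the data and the `split_scores` helper are hypothetical: `customer_id` is unique for every row and carries no real signal, yet splitting on it produces pure one-row subsets.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    """Shannon entropy in bits."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def split_scores(feature_values, labels):
    """Return (information gain, gain ratio) for splitting on one categorical feature."""
    groups = defaultdict(list)  # one child subset per distinct feature value
    for v, y in zip(feature_values, labels):
        groups[v].append(y)
    n = len(labels)
    weights = [len(g) / n for g in groups.values()]
    gain = entropy(labels) - sum(w * entropy(g) for w, g in zip(weights, groups.values()))
    split_info = -sum(w * math.log2(w) for w in weights)  # grows with the number of groups
    return gain, gain / split_info if split_info > 0 else 0.0


# Hypothetical toy data: 8 loan applicants.
labels = ["approve"] * 4 + ["reject", "reject", "reject", "approve"]
employed = ["yes"] * 4 + ["no"] * 4
customer_id = [f"C{i}" for i in range(8)]  # unique per row: no predictive value

for name, values in [("employed", employed), ("customer_id", customer_id)]:
    gain, ratio = split_scores(values, labels)
    print(f"{name}: information gain={gain:.3f}, gain ratio={ratio:.3f}")
```

Information Gain ranks `customer_id` above `employed`, while Gain Ratio, which penalises splits into many small groups, ranks `employed` higher. Countering this bias is the motivation behind C4.5’s use of Gain Ratio.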

Decision Tree in ML vs Other Algorithms

How does a decision tree compare with other algorithms?

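- Vs Logistic Regression: Decision trees can capture non-linear relationships and feature interactions, while logistic regression learns a linear decision boundary and works best when the relationship is roughly linear.

- Vs Neural Networks: Decision trees are simple and interpretable; neural networks are more complex and usually require more data.

- Vs Random Forest: A single decision tree is usually less accurate and less stable than a Random Forest, which combines the votes of many trees trained on random samples of the data.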
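The gap between a single tree and a Random Forest comes from combining many trees. The sketch below shows only the bagging (bootstrap aggregating) part of that idea, under simplifying assumptions: each “tree” is a one-split stump on a single numerical feature, each stump is trained on a bootstrap sample, and predictions are a majority vote. A real Random Forest grows deeper trees and also considers a random subset of features at each split, which this one-feature sketch cannot show. All names and data are hypothetical.

```python
import random
from collections import Counter
from typing import List, Tuple

Stump = Tuple[float, str, str]  # (threshold, label if x <= threshold, label otherwise)


def fit_stump(xs: List[float], ys: List[str]) -> Stump:
    """Fit a one-split tree (a 'stump') that minimises training errors."""
    best, best_errors = None, len(ys) + 1
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        left_label = Counter(left).most_common(1)[0][0]
        right_label = Counter(right).most_common(1)[0][0] if right else left_label
        errors = sum(y != left_label for y in left) + sum(y != right_label for y in right)
        if errors < best_errors:
            best, best_errors = (t, left_label, right_label), errors
    return best


def fit_forest(xs: List[float], ys: List[str], n_trees: int = 25, seed: int = 0) -> List[Stump]:
    """Train each stump on a bootstrap sample (rows drawn with replacement)."""
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(xs)) for _ in xs]
        forest.append(fit_stump([xs[i] for i in idx], [ys[i] for i in idx]))
    return forest


def predict_forest(forest: List[Stump], x: float) -> str:
    """Majority vote across all stumps."""
    votes = Counter(left if x <= t else right for t, left, right in forest)
    return votes.most_common(1)[0][0]


xs = [1, 2, 3, 4, 5, 6, 7, 8]       # hypothetical feature values
ys = ["fail"] * 4 + ["pass"] * 4    # hypothetical labels
forest = fit_forest(xs, ys)
print(predict_forest(forest, 1.5), predict_forest(forest, 7.5))
```

Because each stump sees a slightly different resample, individual stumps can disagree (an unlucky sample might contain only one class), but the majority vote smooths those errors out. That averaging is what makes the ensemble more stable than any single tree.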

Applications of Decision Trees in Machine Learning

The use of decision trees is widespread across industries:

- Healthcare: Diagnosing diseases based on symptoms.

- Banking: Loan approvals, fraud detection.

- Retail: Customer segmentation and recommendation systems.

- Education: Predicting student performance.

- Marketing: Targeting the right audience with personalised campaigns.

This shows that the practical impact of decision trees in machine learning goes far beyond theoretical concepts.

On A Final Note…

So, what is a decision tree in machine learning? It’s one of the most intuitive and widely used algorithms, and it mimics human decision-making. Whether it’s used for predicting exam results, approving loans, or diagnosing diseases, a decision tree brings logic and clarity to predictions.

By now, you’ve seen how the decision tree algorithm works, learned about the different types of decision trees, and weighed their advantages and disadvantages.

Decision trees are powerful, easy to use, and serve as the foundation for more advanced algorithms like Random Forest and Gradient Boosted Trees.

FAQs

Q1. What is a decision tree in machine learning, in simple words?

It’s a supervised learning algorithm that splits data into smaller parts using questions until a final prediction is made.

Q2. What are the types of decision trees in machine learning?

Classification trees, regression trees, ID3, C4.5, and CART are the most common types.

Q3. Where are decision trees used in real life?

They are used in banking (loan approvals), healthcare (diagnosis), marketing (customer segmentation), and many more fields.

Q4. What are the advantages and disadvantages of decision trees?

Advantages include easy interpretation, no need for feature scaling, and flexibility. Disadvantages include overfitting, instability, and a bias towards features with many levels.

Q5. Is a decision tree better than a neural network?

It depends. For small datasets and interpretability, decision trees are better. For large, complex data, neural networks may perform better.